Once I wondered: 'Why do the RAW files from my Nikon D700 weigh so little?' While looking for an answer, I found some very interesting information.

About pixel fraud

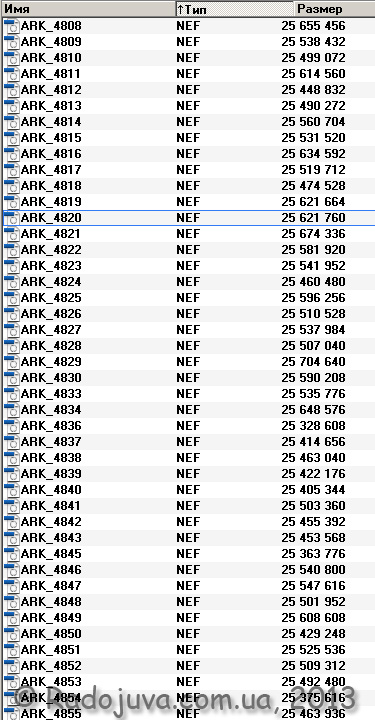

So, when shooting, I sometimes use the RAW file format (Nikon calls it NEF, Nikon Electronic Format). Nikon RAW files have several settings; I usually use 14-bit color depth with lossless compression or no compression at all. In general, 14-bit NEF files without compression weigh about 24.4 MB. In the picture below, I show the sizes of my files in bytes.

NEF file sizes on my Nikon D700 camera

As you can see, the files are almost the same size. Take, for example, the ARK-4820.NEF file: its size is just over 25.6 million bytes, or about 24.4 MB. Converting bytes to megabytes is very simple: divide by 1 048 576 (1024 × 1024):

25 624 760 / 1 048 576 ≈ 24.44 MB
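The conversion can be written as a one-line helper. This Python sketch assumes the binary megabyte (1 048 576 bytes) used in the division above.

```python
# Binary megabyte, matching the 1 048 576 divisor used above (assumption).
BYTES_PER_MB = 1024 * 1024


def bytes_to_mb(size_bytes: int) -> float:
    """Convert a size in bytes to binary megabytes (1 MB = 1 048 576 bytes)."""
    return size_bytes / BYTES_PER_MB


print(f"{bytes_to_mb(25_624_760):.2f} MB")  # prints "24.44 MB"
```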

I would like to draw your attention to the fact that RAW (NEF) files differ slightly in size because they carry not only the useful 'raw' data but also a small preview picture and a block of EXIF data. The preview is used to quickly view the image on the camera monitor: instead of loading the heavy 25 MB file, the camera simply pulls out the preview and shows it on its display. These previews are most likely JPEG-encoded, and JPEG is content-adaptive, so each preview needs a different amount of storage.
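Why do the compressed previews come out at different sizes? Any content-adaptive compressor spends fewer bytes on smooth, uniform areas than on busy detail. The sketch below uses Python's standard `zlib` module purely as a stand-in: it is lossless, unlike JPEG, and the two synthetic 'images' are invented for illustration. It shows that two inputs of identical length compress to very different sizes.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Bytes needed after zlib compression at the highest level."""
    return len(zlib.compress(data, 9))


flat = bytes([128]) * 10_000  # a uniform area, like clear sky
rng = random.Random(0)
busy = bytes(rng.randrange(256) for _ in range(10_000))  # noisy detail, like foliage

print(len(flat), compressed_size(flat))
print(len(busy), compressed_size(busy))
```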

14-bit color depth means that each of the three primary colors is encoded with 14 bits of memory. For example, if you press the 'question mark' (help) button on the corresponding menu item of the Nikon D700, you can read the following:

'NEF (RAW) images are recorded at 14-bit color depth (16384 levels). In this case, the files have a larger size and more accurate reproduction of shades'

A color is formed by mixing three primary colors: red R, green G and blue B. Thus, with 14-bit color depth, we can get any of 4 398 046 511 104 colors (four trillion three hundred ninety-eight billion forty-six million five hundred eleven thousand one hundred four colors).

It is easy to calculate: 16384 (R) * 16384 (G) * 16384 (B)

In fact, 4.4 trillion colors is far more than normal color reproduction needs; such a large reserve is there to make image editing easier. To encode one 'pixel' of the image this way, you need 42 bits of memory:

14 bits R + 14 bits G + 14 bits B = 42 bits
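The color count and the bits per pixel both follow directly from the bit depth. This short Python check reproduces the figures.

```python
BITS_PER_CHANNEL = 14

levels = 2 ** BITS_PER_CHANNEL          # shades per channel
colors = levels ** 3                    # every R x G x B combination
bits_per_pixel = 3 * BITS_PER_CHANNEL   # R + G + B

print(levels)                         # 16384
print(f"{colors:,}")                  # 4,398,046,511,104
print(bits_per_pixel)                 # 42
print(colors == 2 ** bits_per_pixel)  # True
```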

My Nikon D700 creates images at a maximum size of 4256 by 2832 pixels, which gives exactly 12 052 992 pixels (about 12 million pixels, or simply 12 megapixels). If we encode images from my Nikon D700 without any data compression and with 14-bit color depth, we need 506 225 664 bits of information (42 bits/pixel multiplied by 12 052 992 pixels). That equals 63 278 208 bytes, or about 60.35 MB of memory.
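The uncompressed RGB estimate can be reproduced step by step. The sketch below uses the image size from the text and, as above, takes 1 MB to be 1 048 576 bytes.

```python
WIDTH, HEIGHT = 4256, 2832
BITS_PER_PIXEL = 42  # 14 bits each for R, G and B

pixels = WIDTH * HEIGHT               # pixels in a JPEG L image
bits = pixels * BITS_PER_PIXEL        # uncompressed RGB payload
size_bytes = bits // 8                # bits is a multiple of 8 here
size_mb = size_bytes / (1024 * 1024)  # binary megabytes

print(f"{pixels:,} pixels, {bits:,} bits, {size_bytes:,} bytes, {size_mb:.2f} MB")
# 12,052,992 pixels, 506,225,664 bits, 63,278,208 bytes, 60.35 MB
```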

Question: why does this estimate call for about 60 MB per image, when in reality I get files of only 24.4 MB? The secret is that the original RAW file does not store 'real' pixels but information about the subpixels of the Nikon D700's CMOS sensor.

In the camera's manual you can see this:

Excerpt from the Nikon D700 manual

That is, the manual mentions 'effective pixels' and the 'total number' of pixels. The number of effective pixels is easy to calculate: shoot in JPEG L Fine mode and you get a 4256 × 2832 picture, which is the 12 052 992 pixels described above. Rounded, that gives the 12.1 MP stated in the manual. But what is this 'total number of pixels', which is almost one million larger (12.87 MP)?
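The gap between the 'total' and 'effective' figures follows from the JPEG L image size. A quick Python check, using the 12.87 MP total quoted from the manual:

```python
TOTAL_MP = 12.87             # "total pixels" from the manual
WIDTH, HEIGHT = 4256, 2832   # JPEG L image size

effective_mp = WIDTH * HEIGHT / 1e6
extra_mp = TOTAL_MP - effective_mp

print(f"effective: {effective_mp:.1f} MP, extra: {extra_mp:.2f} MP")
# effective: 12.1 MP, extra: 0.82 MP
```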

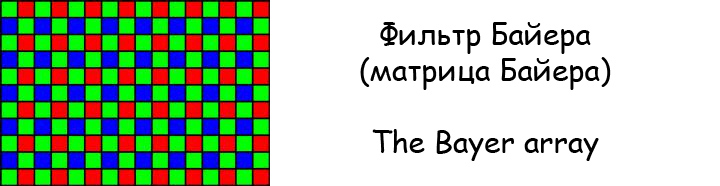

To understand this, let us look at what the Nikon D700's photosensitive sensor looks like.

Bayer Filter

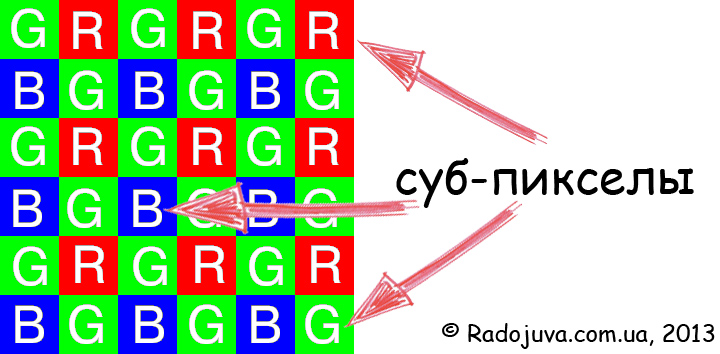

If you look closely, the Bayer matrix does not create any 'multicolor' image. It simply registers green, red and blue points, and there are twice as many green points as red or blue ones.
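The 2:1:1 ratio of green to red to blue shows up in a minimal Python sketch of the mosaic. The sketch assumes the common RGGB tile; the color in the top-left corner differs between cameras.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col), assuming an RGGB tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def count_colors(height: int, width: int) -> Counter:
    """Count how many photosites of each color a height x width mosaic has."""
    return Counter(bayer_color(r, c) for r in range(height) for c in range(width))


for r in range(4):
    print(" ".join(bayer_color(r, c) for c in range(4)))
counts = count_colors(4, 4)
print(f"R={counts['R']} G={counts['G']} B={counts['B']}")  # R=4 G=8 B=4
```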

The 'not real' pixel

In fact, this matrix does not consist of pixels in the usual sense but of sub-pixels, or recording cells. A pixel is usually understood as a point of an image that can display any color. On the Nikon D700's CMOS sensor there are only sub-pixels, each responsible for just one of the three primary colors, and the 'true', 'multicolor' pixels are formed from them. The Nikon D700 sensor has approximately 12 870 000 of these sub-pixels, which the manual lists as the 'total number of pixels'.

There is no 'real' 12 MP on the Nikon D700 sensor. The 12 MP we see in the final image is the result of heavy mathematical interpolation of 12.87 million sub-pixels!

Roughly speaking, the algorithms turn each sub-pixel into one real 'pixel' by borrowing data from the neighboring sub-pixels. This is where the 'pixel street magic' is hidden. Likewise, the 4.4 trillion colors are also the work of the debayering algorithm.
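The 'neighboring sub-pixels' idea becomes concrete in a minimal Python sketch of the simplest kind of demosaicing. For every photosite, it keeps the color that site measured and estimates the two missing colors as the average of the same-colored neighbors in a 3×3 window. This crude neighbor-averaging scheme and the RGGB layout are assumptions for illustration. It is not Nikon's algorithm; real converters use far more elaborate methods.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col), assuming an RGGB tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Turn a single-channel Bayer mosaic (list of rows) into RGB pixels.

    The channel a photosite actually measured is copied unchanged; each missing
    channel is the mean of the same-colored sites in the surrounding 3x3 window.
    Needs at least a 2x2 mosaic so every window contains all three colors.
    """
    h, w = len(raw), len(raw[0])
    image = []
    for r in range(h):
        row = []
        for c in range(w):
            values = {}
            for ch in "RGB":
                if bayer_color(r, c) == ch:
                    values[ch] = raw[r][c]
                    continue
                neighbors = [
                    raw[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, h))
                    for cc in range(max(c - 1, 0), min(c + 2, w))
                    if bayer_color(rr, cc) == ch
                ]
                values[ch] = sum(neighbors) / len(neighbors)
            row.append((values["R"], values["G"], values["B"]))
        image.append(row)
    return image


# Flat test scene: red sites read 200, green 100, blue 50.
LEVEL = {"R": 200, "G": 100, "B": 50}
mosaic = [[LEVEL[bayer_color(r, c)] for c in range(4)] for r in range(4)]
image = demosaic(mosaic)
print(len(image), len(image[0]))      # 4 4
print(image[1][2] == (200, 100, 50))  # True
```

The output has as many RGB pixels as there were sub-pixels, and two of the three numbers in every pixel are estimates rather than measurements.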

The main idea of the article: sub-pixels versus pixels. We are sold 12 million sub-pixels as 12 million 'real pixels'.

To put it very roughly, marketers called the sub-pixels of the Bayer filter 'pixels' and thus substituted the meaning of the word. Everything depends on what exactly is meant by the word 'pixel'.

Let us return to the calculation of file sizes. In fact, the NEF file stores only 14-bit information for each sub-pixel of the Bayer filter, which is exactly the stated color depth. Given that there are about 12 870 000 such sub-pixels on the matrix (the approximate number is given in the manual), storing the information obtained from them requires:

12 870 000 × 14 bits = 180 180 000 bits, or about 21.48 MB

And yet this is not the 24.4 MB that I see on my computer. But if we add the EXIF data and the JPEG preview image to the resulting 21.48 MB, we get the original 24.4 MB. It turns out that the RAW file also stores:

24.4 - 21.48 = 2.92 MB of additional data
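The whole estimate fits in a few lines of Python, from the sub-pixel count down to the leftover for the preview and EXIF data. The sketch uses the approximate 12 870 000 figure from the manual. It assumes the sub-pixels are stored back to back at 14 bits each with no padding, which is the assumption made in the text.

```python
SUBPIXELS = 12_870_000   # approximate "total pixels" from the manual
BITS_PER_SUBPIXEL = 14
FILE_MB = 24.4           # NEF size reported by the computer
MB = 1024 * 1024

payload_bits = SUBPIXELS * BITS_PER_SUBPIXEL
payload_bytes = payload_bits // 8
payload_mb = payload_bytes / MB
overhead_mb = FILE_MB - payload_mb  # room left for the preview JPEG, EXIF, etc.

print(f"{payload_bits:,} bits = {payload_bytes:,} bytes = {payload_mb:.2f} MB")
# 180,180,000 bits = 22,522,500 bytes = 21.48 MB
print(f"overhead: {overhead_mb:.2f} MB")  # overhead: 2.92 MB
```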

Important: similar calculations can be made for cameras with CCD sensors and uncompressed RAW files (Nikon D1, D1h, D1x, D100, D200), as well as for cameras with JFET (LBCAST) sensors (Nikon D2h, D2hs). In fact, it makes no difference whether the sensor is CCD or CMOS: both use a Bayer filter and sub-pixels to form the image.

But Sigma cameras with Foveon sensors, which encode one real pixel with three sub-pixels of the primary colors (as one would expect), produce much larger RAW files for the same 12 MP than cameras with CMOS sensors, and this only confirms my reasoning. By the way, another interesting article on this appeared on Radozhiv, and then another one.

Conclusions

In fact, cameras with Bayer-filter sensors (CCD or CMOS, it doesn't matter) do not have the declared number of 'true' pixels. The sensor holds only a set of Bayer-pattern sub-pixels (photo elements), from which special complex algorithms create the 'real' image pixels. In general, the camera does not see a color image at all: its processor deals only with abstract numbers, each responsible for a single shade of red, blue or green, and creating a color image is just a matter of mathematical tricks. Actually, this is why it is so difficult to achieve 'correct' color rendition on many digital cameras.


Comments on this post do not require registration. Anyone can leave a comment.

Material prepared by Arkady Shapoval.

RAW is a Pandora's box, and no one knows what is stuffed in there. Only one thing is known: it's all interpolation.

Well, at least the makers of Capture One, Lightroom (Camera Raw) and heaps of open-source converters have some idea about it.

The Nikon D700 matrix has about 12 870 000 such sub-pixels, in the instructions such pixels are called 'effective'.

12.87 total, 12.1 effective, 12.87 subpixels, 12.1 pixels.

Thanks for the review, very exciting and interesting :) (Like)

It would be interesting to put these questions to Nikon's official representative office... Arkady, have you thought about it?

As I understand it, things are similar with CCD?

Yes, it does not matter whether it is CCD or CMOS: since there is a Bayer filter, every subpixel is counted as a pixel.

“But Sigma cameras with Foveon sensors have a much larger RAW file size for the same 12 MP compared to CMOS sensors” - I don't know why larger, but the essence is the same. They decided that since all manufacturers lie, why should they be any worse, so they call their actually 4-megapixel sensors 12-megapixel (since one pixel has three subpixels at different depths, marketing could not stay silent).

The point there is completely different. There are 3 layers, and each layer holds only certain subpixels (a blue layer, a green layer, a red layer). For such sensors (for example, the Sigma SD1) the pixel count is written as 15.36 million (4800 × 3200) × 3 layers. That is, there really are 15.36 million PIXELS in total. And the image is much sharper.

For a CMOS sensor to have the same resolution, it must have:

15.36 * 3 * 0.75 = 34.56 million subpixels

0.75 is the conversion factor: 3 subpixels in Foveon versus 4 in CMOS
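The commenter's arithmetic can be reproduced in Python. This only checks the numbers; it does not validate the 0.75 factor itself, which is the commenter's assumption.

```python
SITES_M = 4800 * 3200 / 1e6  # 15.36 million Foveon pixel locations (Sigma SD1 figure quoted above)
LAYERS = 3                   # stacked blue, green and red layers
FACTOR = 0.75                # the commenter's factor: 3 Foveon layers vs 4 Bayer photosites

photodiodes_m = SITES_M * LAYERS
bayer_equivalent_m = photodiodes_m * FACTOR

print(f"{photodiodes_m:.2f} million photodiodes")           # 46.08 million photodiodes
print(f"{bayer_equivalent_m:.2f} million Bayer subpixels")  # 34.56 million Bayer subpixels
```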

Yes, it's exactly the same. “Like the manufacturers of Bayer sensors, who state the number of single-color subpixels in the sensor specifications, Foveon positions the X3-14.1MP sensor as '14-megapixel' (4.68 million three-sensor 'columns'). This marketing approach, which calls a single-color element a 'pixel', is now common in the photography industry.”

By the way, does anyone know the Bayer filter structure of the Nikon D1X with its wonderful sensor?

It's exactly the same, RGGB, only the pixels are not square but rectangular (elongated vertically).

There is an excellent little program, RawDigger (http://www.rawdigger.ru/). I haven't really figured it out, but it's a very useful tool for digging into the innards: with its help you can see what dirty trick the manufacturer has played)))

Also, the site of the wonderful Alexei Tutubalin (http://blog.lexa.ru/) has a lot of interesting material on digging into the innards.

extremely unusual and interesting article ... thanks!

What a twist! I naively thought that if a pixel consists of three color cells, then 12 MP would mean 36 million cells...

Although there is another interesting point. On an LCD monitor, each pixel also consists of 3 cells. Yet the monitor's specifications give the real number of pixels in width and height, not the number of cells. Only camera manufacturers are being cunning here.

When viewing an image on a TV or monitor, you perceive three subpixels of three colors as one pixel of some other color. There are three rectangles, usually as tall as a square pixel and one third as wide; put them side by side and you get a square.

In a camera, the sensor consists of an array of photosensors that register not COLOR but light intensity. In front of each photocell there is a translucent glass: red, green or blue. The glass transmits only about 1/3 of the visible spectrum. If we remove these filters, we get a clean b/w image. If we put the filters on, we get three sets of b/w images, similar to the channels in Photoshop. On top of that, they will be grainy because of the peculiar arrangement of the elements on the sensor.

The camera stretches the image from each channel, fills in the missing pixels and assembles from them the RGB image that the monitor displays to us. Raw converters do the same thing instead of the camera, sometimes better thanks to greater processing power.

Not quite. There are monitors with four subpixels, RGBW. And AMOLED generally has a terrible layout, similar to Bayer.

I have a Canon 50D with 15 MP, and its RAW files range from 13 to 27 MB.

Hmm... I have a Canon 550D and also get different RAW sizes, and they vary a lot, which does not agree with the article...

This is because the 550D uses compressed RAW. On the D700 you can also get RAW files from 10 to 25 MB, as described here: https://radojuva.com.ua/2012/08/12-bit-raw-vs-14-bit-raw/. That is why in this article I emphasize that I used the “no compression” setting in the camera menu.

What about my 50D then? I always shoot RAW on it, and it is uncompressed, 14-bit.

And what is supposed to be wrong with it?

Well, I wonder why all my files have different sizes, whereas, as I understand it, yours are almost the same size.

The Canon 50D uses compressed RAW by default, so the output file size differs from shot to shot. If you set “with compression” on the D700 from this article, its files will also vary in size. The same applies to the junior Nikon and Canon models, where you cannot choose uncompressed RAW. This small detail is one of the things that set professional cameras apart from amateur ones.

That's what I found on Google:

Raw files from digital cameras typically contain:

- discrete voltage values of the sensor elements (before interpolation, for sensors that use color filter arrays);

- metadata: camera identification;

- metadata: technical description of the shooting conditions;

- metadata: default processing parameters;

- one or several variants of a standard graphical representation ("preview", usually a medium-quality JPEG), processed with default settings.

Often the raw file also contains a fairly large JPEG preview, which increases the file size.

Source: Wikipedia

http://ru.wikipedia.org/wiki/Raw_(формат_фотографий)

Arkady, you started so well and finished so badly.

“Considering that there are 12 052 992 such sub-pixels on the matrix, storing the information received from them will require:

12 052 992 × 14 bits = 168 741 888 bits, or 21 092 736 bytes, or 20.11 MB”

You wrote yourself that there are 12 870 000 subpixels, the real photosensors.

Therefore:

12 870 000 × 14 bits = 180 180 000 bits = 21.48 MB. That's the first point.

Second. Storing 2 bytes (16 bits) instead of 14 bits is a luxury nobody needs. Every four subpixels already include two green ones, i.e. 28 bits, which is half the size of a 'quad' pixel.

That is what happens in computer graphics: with 16-bit color, 16 is not divisible by 3 (three color components), so the extra 16th bit goes to green, because the eye is most sensitive to that color.

Thus, (24.44 - 21.48) MB is used to store what you listed (including what is described on Wikipedia).
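The 16-bit color format the commenter describes is usually called RGB565: 5 bits for red, 6 for green and 5 for blue. Here is a minimal Python sketch of packing and unpacking; the function names are hypothetical.

```python
def pack_rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit R, G, B into one 16-bit word: 5 bits red, 6 green, 5 blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(word: int) -> tuple:
    """Recover the truncated channels, scaled back to the 0..255 range."""
    r = (word >> 11) & 0x1F
    g = (word >> 5) & 0x3F
    b = word & 0x1F
    return (r << 3, g << 2, b << 3)


print(hex(pack_rgb565(255, 255, 255)))        # 0xffff
print(unpack_rgb565(pack_rgb565(200, 100, 50)))  # (200, 100, 48)
```

Green keeps 64 levels while red and blue keep 32. That extra precision is the '16th bit' mentioned above.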

In the last calculation I did originally have 12 870 000, but I can't really verify how many there are, so I ended up using only the values I can see on my computer. The bottom line doesn't really change.

In any case, 12 052 992 is a virtual number that appears only after debayering, and in RAW there is no debayering yet.

I have returned the article to its original form; take a look, is it better now?

Yes. And it's good that the statement that RAW must store the 'coordinates' of subpixels was removed. Indeed, to know which subpixel is encoded at a given place in the binary data, it is enough to know their order and the width and height of the Bayer array. In a bit-reading loop this is very easy to do.
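The commenter's point can be sketched in Python: the pattern order and the array dimensions are enough to know which color each sample belongs to. The function name, the RGGB default and the row-major layout are assumptions for illustration, not the actual NEF layout.

```python
def label_stream(values, width, height, pattern="RGGB"):
    """Pair each sample of a row-major raw stream with its filter color.

    `pattern` lists the repeating 2x2 tile row by row (RGGB is an assumed
    default). The width gives each sample's row and column; the height is only
    used to check that the stream has the expected length.
    """
    if len(values) != width * height:
        raise ValueError("stream length does not match width * height")
    labeled = []
    for i, value in enumerate(values):
        row, col = divmod(i, width)
        labeled.append((pattern[(row % 2) * 2 + col % 2], value))
    return labeled


stream = [10, 20, 11, 21, 30, 40, 31, 41]  # 2 rows x 4 columns
print(label_stream(stream, width=4, height=2))
# [('R', 10), ('G', 20), ('R', 11), ('G', 21), ('G', 30), ('B', 40), ('G', 31), ('B', 41)]
```

No per-sample coordinates are stored: the position in the stream alone determines the color.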

Article is super!

Arkady! Marketers cheat the same way with the LCD screens on cameras. For example, my D7000's screen is listed as having 921 600 dots. That seems like a high resolution, but in fact the screen has ordinary VGA resolution (640 × 480), and the large number of dots comes from the formula 640 × 480 × 3, where 3 is the number of color subpixels.

And the subpixels themselves do not cover the entire area of the sensor; only in the diagram are they so 'fat' :)

You are wrong! The screen resolution is correct! 921600 points! 1176 × 784 px

In the book by Thom Hogan, a recognized expert on Nikon cameras, "Complete Guide to the Nikon D7000", pages 236-237, it is stated that the screen has VGA resolution of 640 × 480 pixels, or more precisely, an array of 1920 × 480 pixels.
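The dot counts quoted in this exchange are easy to check with a small Python helper. The helper name is hypothetical; it simply counts every R, G and B subpixel as a separate dot.

```python
def lcd_dots(width_px: int, height_px: int, subpixels_per_pixel: int = 3) -> int:
    """Dot count when every R, G and B subpixel is counted as a separate dot."""
    return width_px * height_px * subpixels_per_pixel


print(lcd_dots(640, 480))  # 921600
print(1920 * 480)          # 921600
```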

Good evening. Arkady, I've never had a professional camera. Question: do 14 bits of color really convey a picture much more accurately than 12?

14 bits are better suited to subsequent processing, and on a monitor the pictures cannot be told apart. 14-bit is available not only on professional cameras; for example, the D5100 and D5200 also use 14-bit color depth, but only with compression. More details here: https://radojuva.com.ua/2012/08/12-bit-raw-vs-14-bit-raw/

Arkady, thanks for the article!

Everything seems to be fine, but in my opinion, something does not add up ...

If the D700 has 12 mega-subpixels, how on earth is a picture with a resolution of 4256 by 2832 formed??? If 12 million were the number of subpixels, the final resolution would have to be different!

After all, each point of these 4256 by 2832 is formed by 3 (4) subpixels!

If I am mistaken, please correct me.

Actually, that is the debayering algorithm: one monochrome pixel becomes a color one by reading information from neighboring pixels. In the article, I have repeatedly mentioned that the algorithm makes pixels out of subpixels, thereby pulling the wool over our eyes somewhat.

On the D5100, you can disable RAW compression using hacked firmware. I have it disabled. Files come out at about 32 MB on average. :)

Regarding the 14-bit vs 12-bit article:

"The last 2 bits - why are they?"

http://www.libraw.su/articles/last-2-bits.html

Therefore, the Super CCD sensor renders color an order of magnitude better than standard CMOS.

And the old Kodak renders colors better than any Nikon.

There is one :)

Arkady, thank you for your work, I do not miss a single article of yours. I found out a lot of useful things, thank you very much again!

Hello. I was also interested in this issue, and here is what I dug up.

First, the debayering algorithm. In essence it is quite simple: a 2x2 square of sub-pixels is taken from the sensor, containing one red, one blue and two green ones. From these, the color of a pixel is calculated. The square is then shifted one sub-pixel to the right, and again it covers one R, one B and two G. In this way the square 'runs through' the entire sensor, and the output is a picture of almost the same resolution as the sub-pixel array. This is a simplification; in reality it is a bit more complicated, and each company has its own know-how for avoiding chromatic distortion, blurring and so on during debayering.

Where do the non-effective pixels go? dpreview has a little about it: http://www.dpreview.com/glossary/camera-system/effective-pixels. In short:

1. Some pixels are lost to debayering, one on each side. Try it yourself: for example, a 4x4 square (16 sub-pixels) contains only 9 2x2 squares (the resulting pixels).

2. Some pixels receive no light; they are used to measure how the sensor behaves in its unlit part: the black level, perhaps the noise level, and other information.

3. And some sensor elements are lost due to design features: the sensor is simply too large for the given camera model, and part of its area goes unused, because manufacturing a separate sensor of the exact size for this one camera is expensive.
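The simplified 2x2 scheme described above can be checked with a small Python sketch, along with the 4x4 → 9 count from point 1. The sketch assumes an RGGB layout and simply averages the two greens in each window. The function name is hypothetical, and this is the commenter's simplified scheme, not any manufacturer's method.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col), assuming an RGGB tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def sliding_2x2_demosaic(raw):
    """Slide a 2x2 window one sub-pixel at a time over a Bayer mosaic.

    Every window holds one R, one B and two G sites, so each output pixel
    is (R, mean of the two G, B). An H x W mosaic yields (H-1) x (W-1) pixels.
    """
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h - 1):
        row = []
        for c in range(w - 1):
            samples = {"R": [], "G": [], "B": []}
            for rr in (r, r + 1):
                for cc in (c, c + 1):
                    samples[bayer_color(rr, cc)].append(raw[rr][cc])
            row.append(tuple(sum(samples[ch]) / len(samples[ch]) for ch in "RGB"))
        out.append(row)
    return out


mosaic = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 = 16 sub-pixels
pixels = sliding_2x2_demosaic(mosaic)
print(len(pixels) * len(pixels[0]))  # 9
```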

And in general, if you search for the phrases "ineffective pixels" or "where do ineffective pixels go", Google/Yandex finds much more than a search for "effective" :)

You also miscalculated the final file size. Yes, absolutely all information from the sensor is stored in RAW, in its rawest form. The camera's processor reads 14-bit data from the sensor into a 2-byte memory cell and writes it to the file in that form, most likely linearly, as it is read, without any special file format. Thus each sub-pixel takes not 14 bits but all 16, and the resulting file size is 12 870 000 × 2 = 25 740 000 bytes, or about 24.5 megabytes. Add metadata (shooting conditions, camera settings, camera model, firmware version) plus a preview picture, and you get your 25 exactly.

Two bytes doesn't add up. By your own figure that comes to 24.54 MB, which is already larger than my 24.4 MB file. Where would the metadata go?

Yes, sorry, I really missed that. As it turned out, even within the same company, data can either be packed, in which case each sub-pixel really occupies only its own bits, or stored separately, in which case each one expands to two bytes.
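The two storage options in this exchange can be sketched in Python: packing 14-bit samples back to back, versus storing each in a 16-bit word. The helpers are hypothetical, and real RAW formats use their own (often undocumented) bit layouts.

```python
import struct


def pack_14bit(samples):
    """Pack 14-bit samples back to back, most significant bit first."""
    out = bytearray()
    acc = 0    # bit accumulator
    nbits = 0  # number of not-yet-written bits held in acc
    for s in samples:
        if not 0 <= s < 1 << 14:
            raise ValueError(f"{s} does not fit in 14 bits")
        acc = (acc << 14) | s
        nbits += 14
        while nbits >= 8:
            nbits -= 8
            out.append((acc >> nbits) & 0xFF)
        acc &= (1 << nbits) - 1  # keep only the unwritten bits
    if nbits:  # pad the last partial byte with zero bits
        out.append((acc << (8 - nbits)) & 0xFF)
    return bytes(out)


def unpack_14bit(data, count):
    """Inverse of pack_14bit: read `count` 14-bit samples from `data`."""
    samples = []
    acc = 0
    nbits = 0
    for byte in data:
        acc = (acc << 8) | byte
        nbits += 8
        if nbits >= 14:
            nbits -= 14
            samples.append((acc >> nbits) & 0x3FFF)
            acc &= (1 << nbits) - 1
            if len(samples) == count:
                break
    return samples


samples = [0, 1, 12345, 16383]
packed = pack_14bit(samples)                          # 56 bits -> 7 bytes
padded = struct.pack(f"<{len(samples)}H", *samples)   # four 16-bit words -> 8 bytes
print(len(packed), len(padded))                       # 7 8

SUBPIXELS = 12_870_000
print(f"{SUBPIXELS * 14 // 8:,} vs {SUBPIXELS * 2:,} bytes")  # 22,522,500 vs 25,740,000 bytes
```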

“First, the debayering algorithm. In essence it is quite simple: a 2x2 square of sub-pixels is taken from the sensor, containing one red, one blue and two green ones.”

Not really. More precisely, not at all :) Processing is done for each plane separately: red, blue and green. Green has twice the resolution. The task comes down to restoring the three missing pixels in each 2x2 block for red and for blue, and the two missing pixels for green.

The algorithms used vary widely and are often quite complex, from interpolation over larger blocks (5x5 or 7x7 pixels rather than a 2x2 square) to calculations based on a luminance component derived from pixels of all colors.

You guys now very much resemble a crowd of agitated Talmudic scholars arguing about the meaning of an ancient letter in the middle of a word in some sage's phrase, which supposedly changes the whole meaning and emphasis of the expression when moved elsewhere... Maybe some of you shouldn't be doing photography at all, huh? After all, there are race car drivers and there are car mechanics, there are seamstresses and there are makers of sewing needles...

Comrade, what's wrong with educating yourself on the technical side? Is that really bad?

A professional race car driver who doesn't understand what he is driving and how his machine works is inconceivable.

Cameras nowadays are made by engineers together with marketers, not by artists and photographers.

Should I believe that a miraculous image forms in a marvelous box at the behest of our Lord Jesus Christ? And carry on with my occupation in that spiritual belief, without clouding my meager mind with forbidden fabrications?

You talked nonsense! You are a brave person: not everyone can blurt out nonsense so easily and not be the least bit embarrassed by it. Bravo!

To the exorcist, urgently!!!

That's exactly how it is :) Trying to do photography without even thinking about setting up the light for a shoot is completely foolish: if you don't 'see' what to shoot, there is no point talking about the instrument, the 'camera and lens'. Among the thousands of comments on the site there are only a few with questions 'about light', and hardly more than one sensible answer to them.

I do not understand anything.

“But what are these 'effective pixels', which are almost one million (1 MP) more (12.87 MP)?”

Maybe you meant the total number of pixels, not the effective ones, in this sentence?

Thank you for the article. I took an important point from it. Debayering is essentially interpolation. But interpolation does not work miracles; new information does not come from anywhere. That is, we could in fact shrink a JPG or BMP (not RAW) photo by 2 times in width and height, reducing the file by about 4 times, and it would contain the same amount of information as the original.

That is, all these marketers' inventions, besides inflated numbers, lead to artificially inflated file sizes and, as a result, to spending on new hard drives, flash drives, memory cards, new computers (large files are harder to process), Internet traffic, etc. These marketers...

Debayering and interpolation are not the same thing. Yes, there is less color information than in the final file, but luminance information is nevertheless collected by all the pixels. Manufacturers use reconstruction algorithms more complicated than "enlarge 2 times", so one cannot simply say that 4 pixels form one final pixel; everything is much more complicated. By shrinking the image 2 times, as you suggest, you will not be able to restore by interpolation what the camera produced after debayering. Yes, the reduced image will look sharper, but that does not mean we achieved it without losing information.

As for debayering not being the same as interpolation: suppose a camera's sensor contains 10 × 10 cells, that is 100 bytes of information. From it they make an RGB photo containing 10 × 10 × 3 = 300 bytes. Where did debayering get 200 bytes of new information about the subject? It interpolated them from the original 100 bytes, just cunningly disguised. Those 200 bytes cannot contain any new information about the subject.

Try shrinking any photo 2 times and then restoring its original size, for example with bilinear interpolation (XnView can do this). Do you see much difference between the result and the original?

For a start, study at least roughly how debayering algorithms work, and then tell us that the camera made up 200 bytes of information. It did not make up those 200 bytes; it calculated and restored them from the data of all pixels. If the camera's debayering reduced the resolution, that would lead to some loss of detail.

If I shrink a photo 2 times and then enlarge it, there will be a difference. And the better the camera and optics, the greater the difference (of course, a 16-megapixel frame from a point-and-shoot will be indistinguishable even after resizing 4 times, but that's not what we're talking about).

Also, don't forget about noise and about JPEG itself, which all point-and-shoots and many DSLRs shoot in: there, compressing a lower-resolution image leads to a greater loss of detail. You can check this by saving a 2x-reduced image as JPEG and then enlarging it back.

>> It did not make up these 200 bytes; it calculated and restored them from the data of all pixels

That is interpolation. I can take any picture and, without making anything up, calculate and restore any number of pixels by bilinear interpolation. Only those pixels carry zero meaning. We know only 100 bytes about the subject, and the remaining 200 are guesses pulled out of thin air.

>> If the camera's debayering reduced the resolution, there would be some loss of detail.

Then instead of 10 × 10 you get 5 × 5 × 3 = 75 bytes, i.e. 25 bytes would be lost: the 2 green channels would be averaged into one, a small loss. Of course, it's better for the marketer to inflate the size to 300 bytes.

You can convert the 10 × 10 cell sensor into a 10 × 10 × 3 photo honestly, so that each pixel has the same color as its cell, i.e. in each pixel only the red, only the green or only the blue subpixel lights up, and the other 2 stay dark. Although this photo takes 300 bytes, it stores only 100; the remaining 200 are empty zeros. But this is an honest conversion. Do whatever you want with it afterwards, debayering or interpolation. But then the fraud would be very obvious: enlarge the picture, and each pixel shows only one shade. So manufacturers actually blur this photo a bit, averaging each pixel with its neighbors, and call it by the clever word debayering, although it is not even interpolation, just a dumb blur. Now we see pixels of all shades and the deception is not obvious. The photo had 100 bytes of useful information and still has only that; the remaining 200 are garbage.

Several people are already explaining the same thing to you, but you keep repeating your own: garbage and all that :)

Either learn how debayering really works, or shrink your photos 4 times and go on thinking you lose nothing. I can recommend the Nokia 808 PureView phone, which is just right for you: a 41-megapixel sensor, but the emphasis is not on resolution but on high-quality 8-megapixel images obtained by combining neighboring pixels. There you will get the most honest megapixels :)
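'Combining neighboring pixels' can be illustrated with a minimal Python sketch of generic 2x2 binning on a single-channel image. This is an assumed, simplified stand-in. Nokia's actual PureView processing is not described here and certainly differs.

```python
def bin_2x2(image):
    """Average each non-overlapping 2x2 block of a single-channel image.

    The result has half the width and half the height; averaging four
    independent noisy samples roughly halves their random noise.
    """
    h, w = len(image), len(image[0])
    if h % 2 or w % 2:
        raise ValueError("width and height must be even")
    return [
        [
            (image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]


print(bin_2x2([[1, 3, 5, 7], [1, 3, 5, 7]]))  # [[2.0, 6.0]]
```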

We'll bury the manufacturer in falsification claims) No judge will get the topic... and the article is strong, it makes you think.

And where, in fact, is the falsification? :)

The camera has 12 megapixels, it has about 12 million light-sensitive cells, and the image contains 12 million points: everything matches what is declared.

Everything is very interesting and informative. Many thanks to the author for his work, and may everything in his life be good.